The main goal of global transaction ID is to make it easy to promote a new master and switch all slaves over to continue replication from the new master. This is currently harder than it could be, since the current replication position for a slave is specified in coordinates that are specific to the current master, and it is not trivial to translate them into the corresponding coordinates on the new master. Global transaction ID solves this by annotating each event with the global transaction id which is unique and universal across the whole replication hierarchy.

In addition, there are at least two other main goals for MariaDB global transaction ID:

- Make it easy to setup global transaction ID, and easy to provision a new slave into an existing replication hierarchy.

- Fully support multi-source replication and other similar setups.

Replication streams

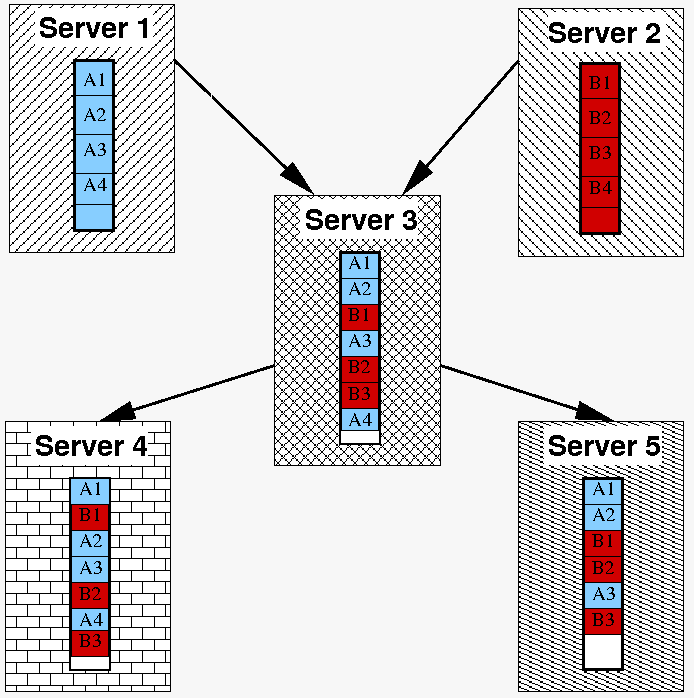

Let us consider the second point first, dealing with multi-source replication. The figure shows a replication topology with five servers. Server 3 is a slave with two independent masters, server 1 and server 2. Server 3 is in addition itself a master for two slaves server 4 and server 5. The coloured boxes A1, A2, … and B1, B2, … denote the binlogs in each server.

When server 3 replicates events from its two master servers, events from one master are applied independently from and in parallel with events from the other master. So the events from server 1 and server 2 get interleaved with each other in the binlog of server 3 in essentially arbitrary order. However, an important point is that events from the same master are still strictly ordered. A2 can be either before or after B1, but it will always be after A1.

When the slave server 4 replicates from master server 3, server 4 sees just a single binlog stream, which is the interleaving of events originating in server 1 and server 2. However, since the two original streams are fully independent (by the way that multi-source replication works in MariaDB), they can be freely applied in parallel on server 4 as well. This is very important! It is already a severe performance bottleneck that replication from one master is single-threaded on the slaves, so replicating events from multiple masters also serially would make matters even worse.

What we do is to annotate the events with a global transaction ID that includes a tag that identifies the original source. In the figure, this is marked with different colours, blue for events originating in server 1, and red for server 2. Server 4 and server 5 are then free to apply a “blue” event in parallel with a “red” event, and such parallel events can thus end up in different order in the binlogs in different places in the replication hierarchy. So every server can have a distinct interleaving of the two original event streams, but every interleaving respects the order within a single original stream. In the figure, we see for example that A2 comes before B1 in server 3 and server 5, but after in server 4, however it is always after A1.

This concept of having a collection of distict binlog streams, each strictly ordered but interleaved with each other in a relaxed way, is very powerful. It allows both great flexibility (and hence opportunity for parallelism) in applying independent events, as well as simple representation of the state of each replication slave at each point in time. For each slave, we simply need to remember the global transaction ID of the last event applied in each independent stream. Then to switch a slave to a new master, the master finds the corresponding places within its own binlog for each independent stream and starts sending events from the appropriate location for each stream.

For example, in the figure, we see that the state of server 4 is (A4, B3) and for server 5 it is (A3, B3). Thus we can change server 5 to use server 4 as a slave directly, as server 4 is strictly ahead of server 5 in the replication streams.

Or if we want to instead make server 5 the new master, then we first need to temporarily replicate from server 4 to server 5 up to (A4, B3). Then we can switch over and make server 5 the new master. Note that in general such a procedure may be necessary, as there may be no single server in the hierarchy that is ahead of every other server in every stream if the original master goes away. But since each stream is simply ordered, it is always possible to bring one server up ahead to server as a master for the others.

Setup and provisioning

This brings us back to the first point about making it easy to setup replication using global transaction ID, and easy to provision a new slave into an existing replication hierarchy.

To create a new slave for a given master, one can proceed exactly the same way whether using global transaction id or not. Make a copy of the master obtaining the corresponding binlog position (mysqldump --master-data, XtraBackup, whatever). Setup the copy as the new slave, and issue CHANGE MASTER TO ... MASTER_LOG_POS=... to start replication. Then when the slave first connects, the master will send last global transaction ID within each existing replication stream, and slave will thus automatically be configured with the correct state. Then if there later is a need to switch the slave to a different master, global transaction ID is already properly set up.

This works exactly because of the property that while we have potentially interleaved distinct replication streams, each stream is strictly ordered across the whole replication hierarchy. I believe this is a very important point, and essential for getting a good global transaction ID design. The notion of an ordered sequence of the statements and transactions executed on the master is the central core of MySQL replication, it is what users know and what has made it so successful despite all its limitations.

Replication domains

To implement this design, MariaDB global transaction ID introduces the notion of a replication domain and an associated domain_id. A replication domain is just a server or group of servers that generate a single, strictly ordered replication stream. Thus, in the example above, there are two domain_id values in play corresponds to the two colours blue and red. The global transaction ID includes the domain_id, and this way every event can be identified with its containing replication stream.

Another important point here is that domain_id is something the DBA configures explicitly. MySQL replication is all about the DBA having control and flexibility. The existence of independent streams of events is a property of the application of MySQL, not some server internal, so it needs to be under the control of the user/DBA. In the example, one would configure server 1 with domain_id=1 and server 2 with domain_id=2.

Of course, in basic (and not so basic) replication setups where only one master server is written to by applications at any one time, there is only a single ordered event stream, so domain_id can be ignored and remain at the default (which is 0).

Note by the way that domain_id is different from server_id! It is possible and normal for multiple servers to share the same domain_id, for example server 1 might be a slave of some higher-up master server, and the two would then share the domain_id. One could even imagine that at some point in the future, servers would have moved around so that server 2 was re-provisioned to replace server 1, it would then retain its old server_id but change its domain_id to 1. So both the blue and the red event stream would have instances with server_id=1, but domain_id will always be consistent.

It is also possible for a single server to use multiple domain IDs. For example, a DBA might configure events generated to receive as domain_id a hash of the current schema. This would be a way of declaring that transactions in distinct schemas are guaranteed to be independent, and it would allow slaves to apply those independent transactions in parallel. The slave will just see distinct streams, and apply them in parallel same way as for multi-source replication. This is similar to the multi-threaded slave that MySQL 5.6 implements. But it is more flexible, for example an application could explicitly mark a long-running transaction with a distict domain_id, and then ensure that it is independent of other queries, allowing it to be replicated in parallel and not delay replication of normal queries.

Current status

The MariaDB global transaction ID is work-in-progress, currently planned for MariaDB 10.0.

The current code is maintained on Launchpad: lp:~maria-captains/maria/10.0-mdev26. The design is written up in detail in Jira task MDEV-26, where the progress is also tracked.

Global transaction ID has already been discussed on the maria-developers mailing list. I have received valuable feedback there which has been included in the current design. But I very much welcome additional feedback, I am open to changing anything if it makes the end result better. Much of the community seems to not be using mailing lists to their full potential (hint hint!), hence this blog post to hopefully reach a wider audience that might be interested.

Kristian,

Thanks for the nice explanation. Your implementation of Global Transaction IDs looks, up to a point, like the one used by Tungsten.

What I see missing in this picture is integration with two main points in replication: crash-safe slave and parallel replication. According to the Jira task, you plan to use files to store the current GTID, and parallel replication is still pending. Now, this is where the GTID implementation in MySQL 5.6 is at its weakest point. There is no integration between GTID and the system tables used for parallel replication and crash-safe slave. Since MariaDB 10 is still

in an early development stage, would you consider planning the integration between these features early on, to avoid the same discrepancies we see today in MySQL 5.6? (Namely, there is no place for GTID in the aforementioned tables, nor in CHANGE MASTER TO, and not in the parallel workers tables)

Cheers

Giuseppe

Hi Kristian,

Very nice design. The partial ordering concept you describe is similar to how we think of this problem in Tungsten. It looks as if we can also integrate what you are doing with Tungsten Replication, which will give users two excellent choices for replication setups that should also mix together well. I have a few small points based on our experience.

1. Binlog reordering as you describe has some practical consequences in that it can make fault diagnosis far more complex, since transactions will not be in the same order in different locations. I think you may want to consider some additional options for mysqlbinlog to dump transactions from a particular server domain. This will make it easier to see sequences within particular streams of updates. Another useful feature from Tungsten is the ability to search the logs for a particular global transaction ID. We live and die by that feature in support cases.

2. Given that you have global transaction IDs, wouldn’t it makes sense to alter the CHANGE MASTER TO command to accept a GTID? Otherwise it seems as if this design does not support failover–what happens for example if you are doing the CHANGE MASTER to connect slaves to a different master whose binlog position is not commensurate? Tungsten takes a somewhat different approach here in that we assign a single GTID for each new log record. In your case you would need multiple GTIDs (I suppose) to connect from server 5 to server 3.

3. You mention the fact that there may be gaps in the global transaction ID sequence. We had some discussions of this topic early on in Tungsten and took the approach that there can never be gaps. Instead, if we drop transactions we create a Filter Event, which marks the gaps. I just bring this up because allowing gaps creates potentially ambiguous situations–you cannot tell the difference between a missing transaction and a transaction that has been filtered out for some reason. Filtering is very common in the sort of fan-in you describe in your sample. Ambiguities here lead to very gnarly bugs.

4. This is a tiny feature but it would be nice to allow server_id to be a session variable. That way we could apply it from Tungsten. Also, it would allow users to preserve the value when applying from mysqlbinlog output. I have never quite understood why that was not the case from the start.

Cheers, Robert Hodges

Thanks a lot Giuseppe and Robert for your insightful comments. I decided to

write up a full reply as a follow-up post:

https://knielsen-hq.org/w/more-on-global-transaction-id-in-mariadb/