New MariaDB/MySQL storage engines such as MyRocks and TokuDB have renewed interest in using engines other than InnoDB. This is great, but also presents new challenges. In this article, I will describe work that I am currently finishing, and which addresses one such challenge.

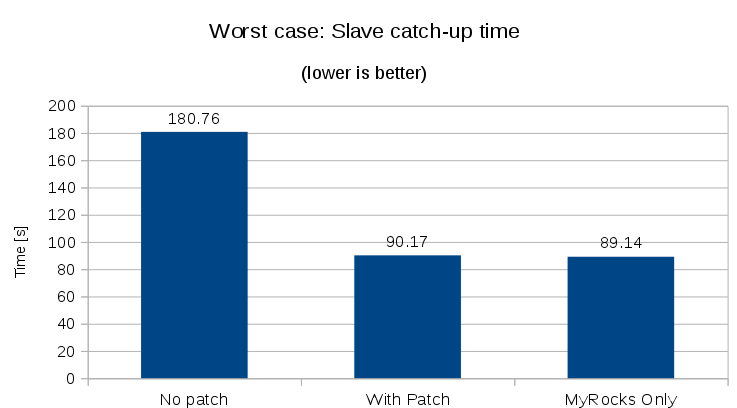

For example, the left bar in the figure shows what happens to MyRocks replication performance when used with a default install where the replication state table uses InnoDB. The middle bar shows the performance improvement from my patch.

For example, the left bar in the figure shows what happens to MyRocks replication performance when used with a default install where the replication state table uses InnoDB. The middle bar shows the performance improvement from my patch.

Current MariaDB and MySQL replication uses tables to transactionally record the replication state (eg mysql.gtid_slave_pos). When non-InnoDB storage engines are introduced the question becomes: What engine should be used for the replication table? Any choice will penalise other engines heavily by injecting a cross-engine transaction with every replicated change. Unless all tables can be migrated to the other engine at once, this is an unavoidable problem with current MariaDB / MySQL code.

To solve this I have implemented MDEV-12179, per-engine mysql.gtid_slave_pos tables, which should hopefully be in MariaDB 10.3 soon. This patch makes the server able to use multiple replication state tables, one for each engine used. This way, InnoDB transactions can update the InnoDB replication table, and eg. MyRocks transactions can update the MyRocks table. No cross-engine transactions are needed (unless the application itself uses both InnoDB and MyRocks in a single transaction).

The feature is enabled with the new option --gtid-pos-auto-engines=innodb,rocksdb. The server will automatically create the new replication tables when/if needed, and will read any such tables present at server start to restore the replication state.

Performance test

To test the impact of the new feature, I ran a sysbench write-only load on a master, and measured the time for a slave to apply the full load. The workload is using MyRocks tables, while the default mysql.gtid_slave_pos table is stored in InnoDB. The performance was compared with and without --gtid-pos-auto-engines=innodb,rocksdb. Full details of test options are available following the link at the end of the article.

Replication injects an update into a small table as part of each commit. The performance impact of this will be most noticeable for fast transactions, where the commit overhead is relatively larger. It will be particularly noticeable when durability is enabled (--innodb-flush-log-at-trx-commit=1 and similar). If another storage engine is added into a transaction, extra fsync() calls are needed in the commit step, which can be very expensive.

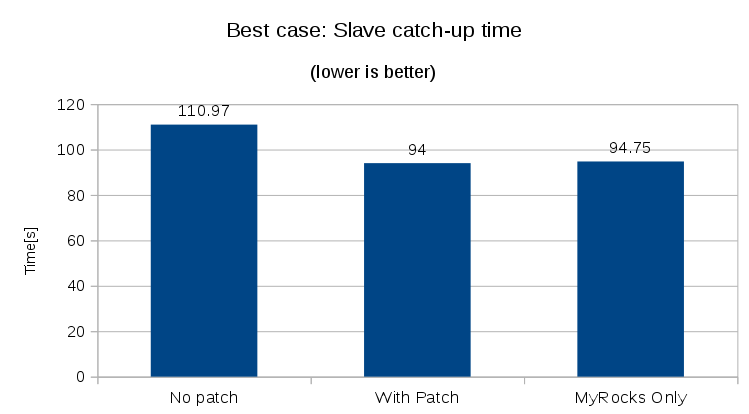

I tested the performance in two scenarios:

- A “worst case” scenario with durability/fsync enabled for binlog, InnoDB, and MyRocks, on hardware with slow fsync.

- A “best case” scenario with all durability/fsync disabled.

In the “worst case” we would hope to see substantial improvement due to reducing the number of fsync operations. In the “best case” improvements will be expected to be small, if any, though there may still be some improvement due to avoiding CPU and some I/O overhead from running the commits through two engines.

The figure at the start of the article shows the results from the “worst case”. The left bar is the time for the slave to catch up when the replication state table is using the default InnoDB storage engine. The middle bar is the time when using --gtid-pos-auto-engines=innodb,myrocks and the right bar is when the state table is changed to MyRocks (MyRocks-only load).

We see a huge speed penalty from the cross-engine transactions introduced by the InnoDB state table, the slave is twice as slow. However, with the patch, all the performance is recovered compared to MyRocks-only setup.

The test was run on consumer-grade hardware with limited I/O capabilities. I ran a small script to test the speed of fdatasync() (see link at end of article). This SATA-attached SSD can do around 120 fdatasync() calls per second, writing 16 KB blocks at random round-robin among five 1MB data files. In the “worst case” test, the load is completely disk-bound. Thus, the absolute transactions-per-second numbers are low, and the impact of the new feature is very big.

The results of the “best case” is in the following figure.  The “best case” workload is CPU-bound, disk utilisation is low. Sysbench write-only does several queries in each transaction, so commit overhead is relatively lower. Still, we see a substantial cost of replication introduced cross-engine, it runs 18% slower than the MyRocks-only case. And again, the patch is able to fully recover the performance.

The “best case” workload is CPU-bound, disk utilisation is low. Sysbench write-only does several queries in each transaction, so commit overhead is relatively lower. Still, we see a substantial cost of replication introduced cross-engine, it runs 18% slower than the MyRocks-only case. And again, the patch is able to fully recover the performance.

So I think these are really good results for the new feature. The impact for the user is low – just set a server option, and the server will handle the rest. We could eventually make InnoDB, TokuDB, and MyRocks default for --gtid-pos-auto-engines to make it fully automatic. The actual performance gain will depend completely on the workload, and the absolute numbers from these performance tests mean little, perhaps. But they do show that there should be significant potential gain in many cases, and enourmous gains in some cases.

Conclusions

I hope this feature will help experiments with, and eventual migration to, the new storage engines such as TokuDB and MyRocks. The ability to have good replication performance when different storage engines are used in different transactions (but not within a single transaction) should make it easier to experiment, without committing everything on a server to a new and unknown engine. There might even be use cases for deploying permanently on a mixed-engine setup, with different parts of the data utilising different performance characteristics of each engine.

The present work here is implemented for MariaDB only. However, there is some discussions on porting it to other MySQL variants. While the details of the implementation will differ somewhat, due to code differences in MariaDB replication, I believe a similar approach should work well in the other MySQL variants also. So it is definitely a possibility, if there is interest.

Links: